FOR IMMEDIATE RELEASE

Machine Learning Aids Earthquake Risk Prediction

Geotechnical engineers develop new framework for understanding soil liquefaction using the DesignSafe Cyberinfrastructure

Sinkholes and liquefaction on roads in Christchurch, New Zealand, after the 2011 earthquake. Image: Martin Luff, CC BY-SA 2.0, via Wikimedia Commons

Austin, Texas, June 25, 2021: Our homes and offices are only as solid as the ground beneath them. When that solid ground turns to liquid, as sometimes happens during earthquakes, it can topple buildings and bridges. This phenomenon is known as liquefaction, and it was a major feature of the 2011 earthquake in Christchurch, New Zealand, a magnitude 6.3 quake that killed 185 people and destroyed thousands of homes.

An upside of the Christchurch quake was that it was one of the best-documented in history. Because New Zealand is seismically active, the city was instrumented with numerous sensors for monitoring earthquakes. Post-event reconnaissance provided a wealth of additional data on how the soil responded across the city.

"It's an enormous amount of data for our field," said postdoctoral researcher Maria Giovanna Durante, a Marie Sklodowska Curie Fellow previously of The University of Texas at Austin (UT Austin). "We said, 'If we have thousands of data points, maybe we can find a trend.'"

Durante works with Prof. Ellen Rathje, Janet S. Cockrell Centennial Chair in Engineering at UT Austin and the principal investigator for the National Science Foundation-funded DesignSafe Cyberinfrastructure, which supports research across the natural hazards community. DesignSafe is part of the Natural Hazards Engineering Research Infrastructure, NHERI, also funded by NSF.

Rathje's personal research on liquefaction led her to study the Christchurch event. She had been thinking about ways to incorporate machine learning into her research, and this case seemed like a great place to start.

"For some time, I have been impressed with how machine learning was being incorporated into other fields, but it seemed we never had enough data in geotechnical engineering to utilize these methods," Rathje said. "However, when I saw the liquefaction data coming out of New Zealand, I knew we had a unique opportunity to finally apply AI techniques to our field."

The two researchers developed a machine learning model that predicted the amount of lateral movement that occurred when the Christchurch earthquake caused soil to lose its strength and shift relative to its surroundings.

The results were published online in Earthquake Spectra in April 2021.

"It's one of the first machine learning studies in our area of geotechnical engineering," Durante said.

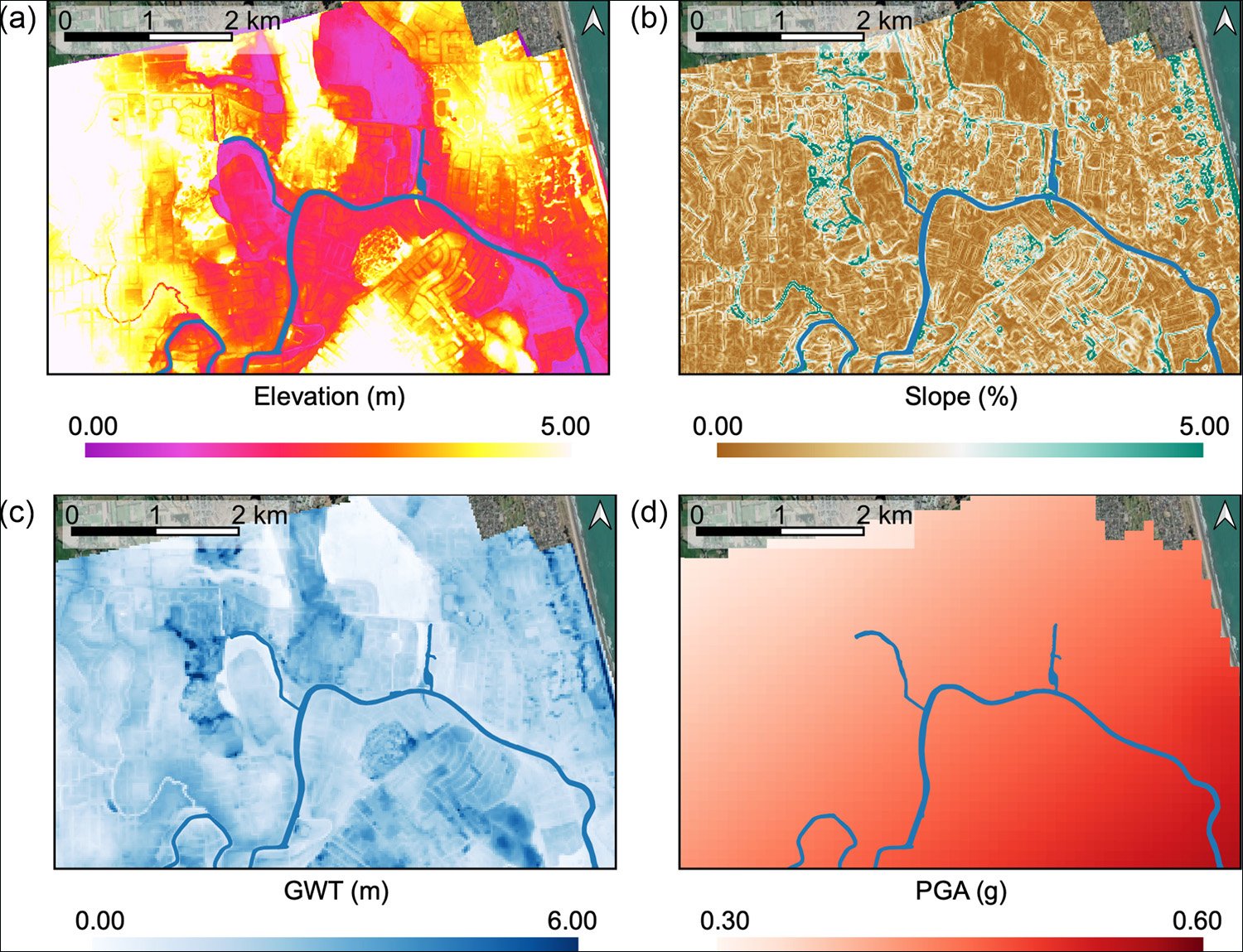

Durante and Rathje trained the model using data related to the peak ground shaking experienced (a trigger for liquefaction), the depth of the water table, the topographic slope, and other factors. In total, more than 7,000 data points from a small area of the city were used as training data. That is a great improvement: previous geotechnical machine learning studies had used only 200 data points.

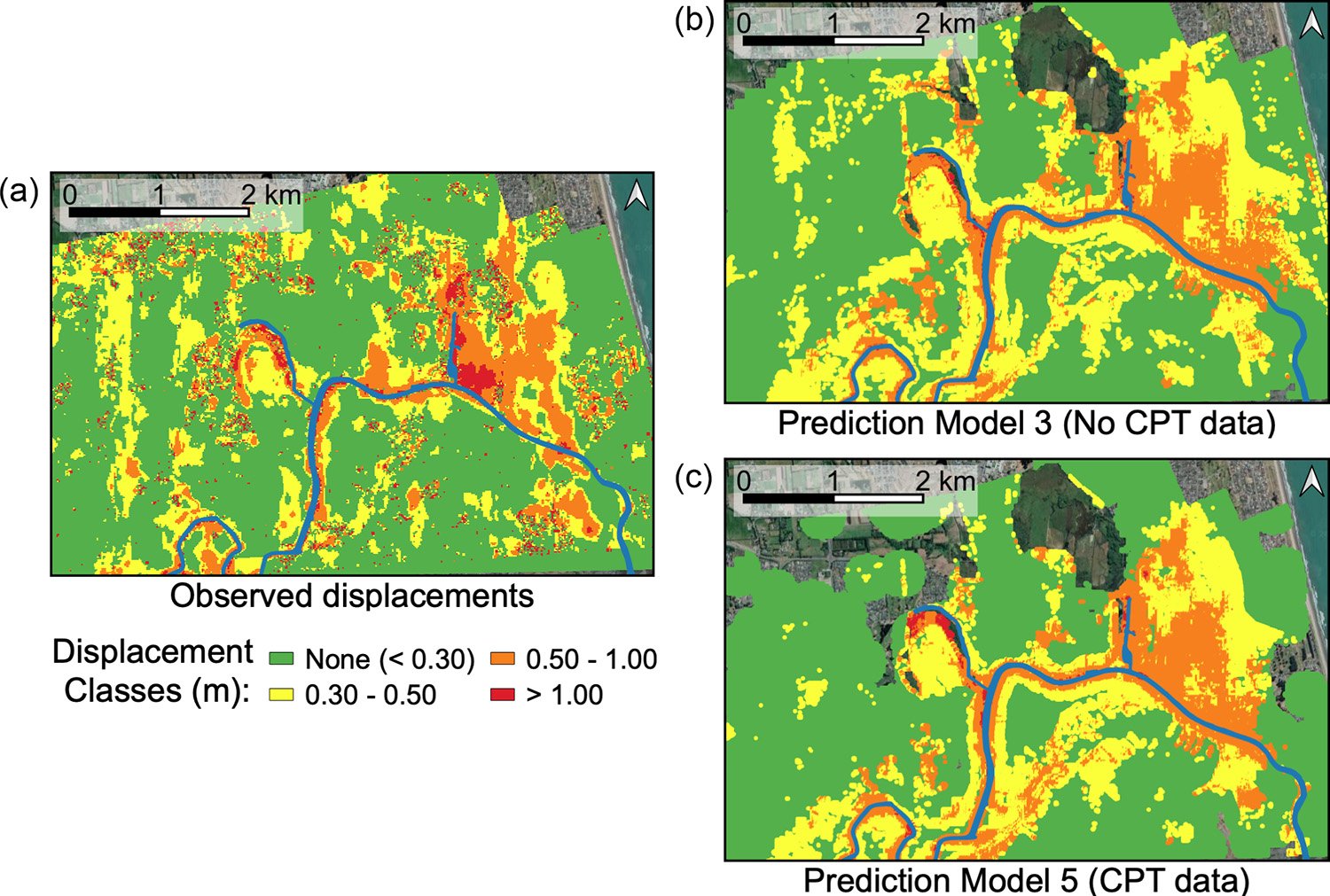

They tested their model citywide on 2.5 million sites around the epicenter of the earthquake to determine the displacement. Their model predicted whether liquefaction occurred with 80% accuracy; it was 70% accurate at determining the amount of displacement.

Spatial distribution of geometric and event-specific input features considered in ML models: (a) ground elevation, (b) ground slope, (c) ground water table depth, and (d) peak ground acceleration. Credit: Maria Giovanna Durante and Ellen M. Rathje

Large-scale lateral spreading displacement maps for the 22 February 2011 Christchurch earthquake. (a) Displacements observed from optical image correlation (after Rathje et al., 2017b), and displacements predicted by Random Forest (RF) classification models using (b) Model 3 (No CPT data) and (c) Model 5 (CPT data). Credit: Maria Giovanna Durante and Ellen M. Rathje

Sharing, Reproducibility, and Access

For Rathje, Durante, and a growing number of natural hazard engineers, a journal publication is not the only result of a research project. They also publish all of their data, models, and methods to the DesignSafe portal, a hub for research related to the impact of hurricanes, earthquakes, tsunamis, and other natural hazards on the built and natural environment.

"We did everything on the project in the DesignSafe portal," Durante said. "All the maps were made using QGIS, a mapping tool available on DesignSafe, using my computer as a way to connect to the cyberinfrastructure."

For their machine learning liquefaction model, they created a Jupyter notebook (an interactive, web-based document that includes the dataset, code, and analyses). The notebook allows other scholars to reproduce the team's findings interactively and test the machine learning model with their own data.

"It was important to us to make the materials available and make it reproducible," Durante said. "We want the whole community to move forward with these methods."

This new paradigm of data-sharing and collaboration is central to DesignSafe and helps the field progress more quickly, according to Joy Pauschke, program director in NSF's Directorate for Engineering.

"Researchers are beginning to use AI methods with natural hazards research data, with exciting results," Pauschke said. "Adding machine learning tools to DesignSafe's data and other resources will lead to new insights and help speed advances that can improve disaster resilience."

Leveraging Supercomputing at UT Austin

The researchers used the Frontera supercomputer at the Texas Advanced Computing Center (TACC), one of the world's fastest, to train and test the model. TACC is a key partner on the DesignSafe project, providing computing resources, software, and storage to the natural hazards engineering community.

Access to Frontera gave Durante and Rathje machine learning capabilities on a scale previously unavailable to the field. Deriving the final machine learning model required testing 2,400 possible models.

"It would have taken years to do this research anywhere else," Durante said. "If you want to run a parametric study, or do a comprehensive analysis, you need to have computational power."

Advances in machine learning require rich datasets, precisely like the data from the Christchurch earthquake. "All of the information about the Christchurch event was available on a website," Durante said. "That's not so common in our community, and without that, this study would not have been possible."

Media Contacts

Aaron Dubrow

Texas Advanced Computing Center

University of Texas at Austin

aarondubrow@tacc.utexas.edu

512-820-5785

About the Natural Hazards Engineering Research Infrastructure

Funded by the National Science Foundation, the Natural Hazards Engineering Research Infrastructure, NHERI, is a network of experimental facilities dedicated to reducing damage and loss of life due to natural hazards such as earthquakes, landslides, windstorms, tsunamis, and storm surge. It is supported by the DesignSafe Cyberinfrastructure. NHERI provides the natural hazards engineering and social science communities with the state-of-the-art resources needed to meet the research challenges of the 21st century. NHERI is supported by multiple awards from NSF, including the NHERI Network Coordination Office, Award #1612144.