Purdue Research Aims to Accelerate Disaster Reconnaissance Data Use

Published on April 7, 2017

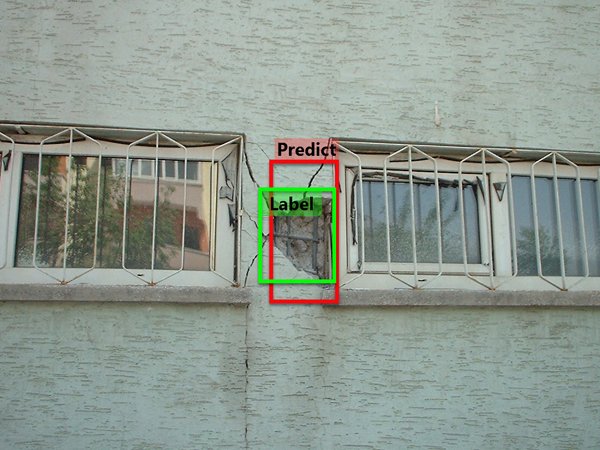

The automated system identifies structural damage in images collected after the 2003 Bingöl earthquake in Turkey. The green box tagged "Label" marks a true spalling damage area. The red box tagged "Predict" is the system's estimate. This automated damage assessment system could dramatically reduce the time it takes engineers to assess damage to buildings after disasters. (Image source: DataHub.)

To streamline the grueling task of collecting and organizing post-disaster data, Purdue researchers are working to automate the process with computers.

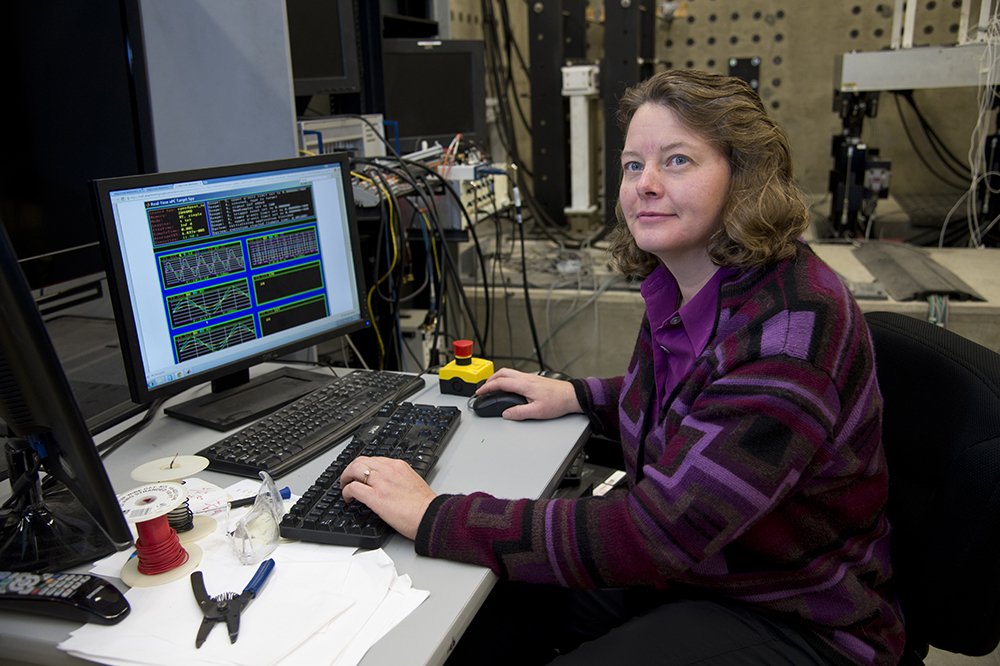

Shirley Dyke, Purdue professor of Civil and Mechanical Engineering, and Chul Min Yeum, a postdoctoral researcher, are developing deep learning algorithms to classify images and identify regions of concern.

"Rather than having teams spend several hours trying to organize their data and figure out how to collect essential data during the next day, we'd rather enable doing this automatically and rapidly with our algorithms," she says.

"We want to automatically determine if an instance of structural damage is there, and understand what additional data should be collected in the affected community. This work will allow teams to collect more data and images, knowing that it can be made available for use."

Dyke's project builds on Yeum's work in both computer vision and deep learning. Deep learning draws on neural network techniques and large data sets to create algorithms and design classifiers for assessing the data.

"Deep learning has been applied to many everyday image classification problems, but as far as we know, we're the first to employ it for damage assessment using a large volume of images," Yeum says.

Training the system requires painstakingly labeling, by hand, tens of thousands of images from diverse data sets according to a chosen schema. The system learns the contents and features of each class and then trains the algorithms to find the best ways to identify the areas of interest.

So far, Dyke and Yeum have gathered about 100,000 digital images for training the system, contributed by researchers and practitioners around the world. Most are from past earthquakes, but images from other hazards, such as tornadoes and hurricanes, are also being collected.

"We will seek opportunities to test our system in the field, perhaps by working with researchers who are examining the tens of thousands of buildings exposed to significant ground motions in Italy in the past months," Dyke says.

"The classifiers trained using our database of past images would be applied to new images collected on site to directly support teams in the field."

"As we develop the methodologies to automatically organize the data, we are engaging researchers who deal with reconnaissance missions and building codes," Dyke says, "so that these classifiers can be used by people around the world."

Project: CMMI 1608762 CDS&E: Enabling Time-critical Decision-support for Disaster Response and Structural Engineering through Automated Visual Data Analytics

Source: Purdue University

Shirley Dyke, professor of Civil and Mechanical Engineering at Purdue, is working on deep learning algorithms to classify images and identify regions of concern. (Photo: Purdue University)

NHERI Quarterly

Spring 2017
